LLM API

The Baidu AI Studio LLM API is a set of foundational LLM API services for developers. It is powered by the Baidu AI Cloud Qianfan Platform and provides access to large models such as ERNIE. The service is compatible with the openai-python SDK, so developers can call ERNIE and other models directly with the native openai-python SDK.

Join the official free tutorial course 《LLM API Service: From Service Calls to Application Practice》 to get started with large models in about 2 minutes.

1. Preparation

1.1 Access Token

The access token authenticates you to AI Studio. It authorizes the operations covered by its scope, such as calling the LLM API or reading repositories. You can view your personal access token on the Access Token page in your personal center.

1.2 Tokens

Tokens are the unit of measurement for calling large-model SDKs and using large-model applications on Baidu AI Studio. AI Studio gives each developer a free quota of 1 million tokens. Different models consume tokens at different rates. You can view usage details on the Token Management page; if your tokens run out, you can buy more on the Buy Tokens page before making further calls.

1.3 Service Domain

The service domain of the Baidu AI Studio LLM API is: https://aistudio.baidu.com/llm/lmapi/v3

When calling the Baidu AI Studio LLM API with openai-python, set:

- api_key = "Your Access Token"

- base_url = "https://aistudio.baidu.com/llm/lmapi/v3"
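A minimal sketch of assembling these two settings, assuming (as every example in this document does) that the access token is stored in the AI_STUDIO_API_KEY environment variable. The helper name `client_settings` is hypothetical; it fails fast when the token is missing instead of letting the first API call fail with an authentication error.

```python
import os
from typing import Mapping, Optional

# Service domain documented for the AI Studio LLM API
AISTUDIO_BASE_URL = "https://aistudio.baidu.com/llm/lmapi/v3"


def client_settings(env: Optional[Mapping[str, str]] = None) -> dict:
    """Return the api_key/base_url pair for openai-python (hypothetical helper).

    Reads the access token from AI_STUDIO_API_KEY and raises if it is absent
    or blank, so configuration errors surface before any request is sent.
    """
    env = os.environ if env is None else env
    token = env.get("AI_STUDIO_API_KEY", "").strip()
    if not token:
        raise RuntimeError("Set AI_STUDIO_API_KEY to your AI Studio access token")
    return {"api_key": token, "base_url": AISTUDIO_BASE_URL}


# Usage with openai-python (not executed here):
#   from openai import OpenAI
#   client = OpenAI(**client_settings())
```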

2. Model List and Query

2.1 Text-to-Text Model List

| Model Name | model Parameter Value | Context Length (tokens) | Max Input (tokens) | Max Output (tokens) |
|---|---|---|---|---|
| (Open Sourced on 6/30) ERNIE-4.5-VL-424B-A47B | ernie-4.5-turbo-vl | 128k | 123k | [2, 12288], default 2k |
| (Open Sourced on 6/30) ERNIE-4.5-300B-A47B | ernie-4.5-turbo-128k-preview | 128k | 123k | [2, 12288], default 2k |
| (Open Sourced on 6/30) ERNIE-4.5-VL-28B-A3B | ernie-4.5-vl-28b-a3b | 128k | 123k | [2, 12288], default 2k |
| (Open Sourced on 6/30) ERNIE-4.5-21B-A3B | ernie-4.5-21b-a3b | 128k | 120k | [2, 12288], default 2k |
| (Open Sourced on 6/30) ERNIE-4.5-0.3B | ernie-4.5-0.3b | 128k | 120k | [2, 12288], default 2k |
| DeepSeek-Chat | deepseek-v3 | 128k | 128k | [2, 12288], default 2k |
| ERNIE 4.0 | ernie-4.0-8k | 8k | 5k | [2, 2048], default 2k |
| ERNIE 4.0 Turbo | ernie-4.0-turbo-128k | 128k | 124k | [2, 4096], default 4k |
| ERNIE 4.0 Turbo | ernie-4.0-turbo-8k | 8k | 5k | [2, 2048], default 2k |
| ERNIE 3.5 | ernie-3.5-8k | 8k | 5k | [2, 2048], default 2k |
| ERNIE Character | ernie-char-8k | 8k | 7k | [2, 2048], default 1k |
| ERNIE Speed | ernie-speed-8k | 8k | 6k | [2, 2048], default 1k |
| ERNIE Speed | ernie-speed-128k | 128k | 124k | [2, 4096], default 4k |
| ERNIE Tiny | ernie-tiny-8k | 8k | 6k | [2, 2048], default 1k |
| ERNIE Lite | ernie-lite-8k | 8k | 6k | [2, 2048], default 1k |
| Kimi-K2 | kimi-k2-instruct | 128k | 128k | [1, 32768], default 4k |
| Qwen3-Coder | qwen3-coder-30b-a3b-instruct | 128k | 128k | [1, 32768], default 4k |
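The Max Output column gives the allowed range for the max_completion_tokens request parameter. A minimal sketch of clamping a requested value into that range before sending it; the dictionary copies only a few rows from the table above and is illustrative, not an exhaustive or authoritative registry, and `clamp_max_tokens` is a hypothetical helper name.

```python
# Allowed max_completion_tokens ranges (min, max), copied from a subset of
# the text-to-text model table; extend it from the table as needed.
MAX_OUTPUT_RANGE = {
    "ernie-4.5-turbo-128k-preview": (2, 12288),
    "ernie-4.5-21b-a3b": (2, 12288),
    "deepseek-v3": (2, 12288),
    "ernie-3.5-8k": (2, 2048),
    "ernie-4.0-turbo-128k": (2, 4096),
    "ernie-speed-128k": (2, 4096),
    "kimi-k2-instruct": (1, 32768),
}


def clamp_max_tokens(model: str, requested: int) -> int:
    """Clamp a requested output length into the model's documented range."""
    if model not in MAX_OUTPUT_RANGE:
        raise KeyError(f"no documented output range for {model!r}")
    low, high = MAX_OUTPUT_RANGE[model]
    return max(low, min(requested, high))


# e.g. client.chat.completions.create(..., max_completion_tokens=clamp_max_tokens("ernie-3.5-8k", 4096))
```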

2.2 Thinking Model List

| Model Name | model Parameter Value | Context Length (tokens) | Max Input (tokens) | Max Output (tokens) | Chain of Thought Length (tokens) |
|---|---|---|---|---|---|
| (Open Sourced on 6/30) ERNIE-4.5-VL-424B-A47B | ernie-4.5-turbo-vl | 128k | 123k | [2, 12288], default 2k | 16k |
| (Open Sourced on 6/30) ERNIE-4.5-VL-28B-A3B | ernie-4.5-vl-28b-a3b | 128k | 123k | [2, 12288], default 2k | 16k |
| ERNIE X1 Turbo | ernie-x1-turbo-32k | 32k | 24k | [2, 16384], default 2k | 16k |
| DeepSeek-Reasoner | deepseek-r1-250528 | 96k | 64k | 16k, default 4k | 32k |
| DeepSeek-Reasoner | deepseek-r1 | 96k | 64k | 16k, default 4k | 32k |
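Thinking models return their chain of thought separately from the final answer. Per the response description in section 4.1.3, it arrives in message.reasoning_content (documented there for DeepSeek-R1). A minimal sketch that reads both fields defensively, so the same code also works for models that do not send the field; `split_reasoning` is a hypothetical helper, and a SimpleNamespace stands in for the SDK's message object.

```python
from types import SimpleNamespace
from typing import Any, Optional, Tuple


def split_reasoning(message: Any) -> Tuple[Optional[str], str]:
    """Return (chain_of_thought, answer) from a chat completion message.

    reasoning_content is not part of the standard OpenAI schema, so it is
    read with getattr and defaults to None when the model does not send it.
    """
    reasoning = getattr(message, "reasoning_content", None)
    answer = message.content or ""
    return reasoning, answer


# Stand-in for completion.choices[0].message from a thinking model
msg = SimpleNamespace(content="42", reasoning_content="6 * 7 = 42")
print(split_reasoning(msg))  # ('6 * 7 = 42', '42')
```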

2.3 Multimodal Model List

For multimodal model usage, see section 5.8 of this document (a video understanding example was added in section 5.8.6 on 2025/6/30).

| Model Name | model Parameter Value | Supported Modalities | Context Length (tokens) | Max Input (tokens) | Max Output (tokens) |
|---|---|---|---|---|---|
| (Open Sourced on 6/30) ERNIE-4.5-VL-424B-A47B | ernie-4.5-turbo-vl | Text, Image, Video | 128k | 123k | [2, 12288] |
| (Open Sourced on 6/30) ERNIE-4.5-VL-28B-A3B | ernie-4.5-vl-28b-a3b | Text, Image, Video | 128k | 123k | [2, 12288] |
| ERNIE 4.5 Turbo VL | ernie-4.5-turbo-vl-32k | Text, Image | 32k | 30k | [1, 8192], default 4k |

2.4 Embedding Model List

| Model Name | model Parameter Value | Max Input Text Count | Context Length per Text (tokens) |
|---|---|---|---|
| Embedding-V1 | embedding-v1 | 1 | 384 |
| bge-large-zh | bge-large-zh | 16 | 512 |

2.5 Text-to-Image Model

| Model Name | Type |
|---|---|
| Stable-Diffusion-XL | Text-to-Image Model |

2.6 Feature Support

2025/6/30 Open Source Model List:

| Model Name | model Parameter Value | Supported Capabilities | Supported Modalities |
|---|---|---|---|
| (Open Sourced on 6/30) ERNIE-4.5-VL-424B-A47B | ernie-4.5-turbo-vl | Chat Model, Thinking (Coming Soon) | Text, Image, Video |
| (Open Sourced on 6/30) ERNIE-4.5-300B-A47B | ernie-4.5-turbo-128k-preview | Chat Model | Text |
| (Open Sourced on 6/30) ERNIE-4.5-VL-28B-A3B | ernie-4.5-vl-28b-a3b | Chat Model, Thinking | Text, Image, Video (Coming Soon) |
| (Open Sourced on 6/30) ERNIE-4.5-21B-A3B | ernie-4.5-21b-a3b | Chat Model | Text |
| (Open Sourced on 6/30) ERNIE-4.5-0.3B | ernie-4.5-0.3b | Chat Model | Text |

Web Search (Search Enhancement):

- ernie-4.5

- ernie-4.5-turbo

- ernie-4.0

- ernie-4.0-turbo

- ernie-3.5

- deepseek-r1

- deepseek-v3

Function Calling:

- ernie-x1-turbo-32k

- deepseek-r1

- deepseek-v3

Structured Output:

- ernie-4.5

- ernie-4.0-turbo

- ernie-3.5

2.7 Query Model List

```python
# Query the list of supported models
import os

from openai import OpenAI

client = OpenAI(
    api_key=os.environ.get("AI_STUDIO_API_KEY"),  # Environment variable containing the AI Studio access token, see https://aistudio.baidu.com/account/accessToken
    base_url="https://aistudio.baidu.com/llm/lmapi/v3",  # AI Studio LLM API service domain
)

models = client.models.list()

for model in models.data:
    print(model.id)
```

3. Install Dependencies

```shell
# install from PyPI
pip install openai
```

4. Basic Model Capability Usage

4.1 Text-to-Text

4.1.1 Model Use

```python
import os

from openai import OpenAI

client = OpenAI(
    api_key=os.environ.get("AI_STUDIO_API_KEY"),  # Environment variable containing the AI Studio access token, see https://aistudio.baidu.com/account/accessToken
    base_url="https://aistudio.baidu.com/llm/lmapi/v3",  # AI Studio LLM API service domain
)

chat_completion = client.chat.completions.create(
    messages=[
        {'role': 'system', 'content': 'You are a developer assistant for the AI Studio training platform. You are proficient in development-related knowledge and responsible for providing developers with search-related help and suggestions.'},
        {'role': 'user', 'content': 'Hello, please introduce AI Studio'},
    ],
    model="ernie-3.5-8k",
)

print(chat_completion.choices[0].message.content)
```

To avoid hard-coding the api_key in your code, you can use python-dotenv and add AI_STUDIO_API_KEY="YOUR_ACCESS_TOKEN" to your .env file. Alternatively, you can pass it directly with api_key="YOUR_ACCESS_TOKEN".

4.1.2 Request Parameter Description

body Description

| Name | Type | Required | Description | Natively supported by openai-python |

|---|---|---|---|---|

| model | string | Yes | Model ID, available values can be obtained from client.models.list() |

Yes |

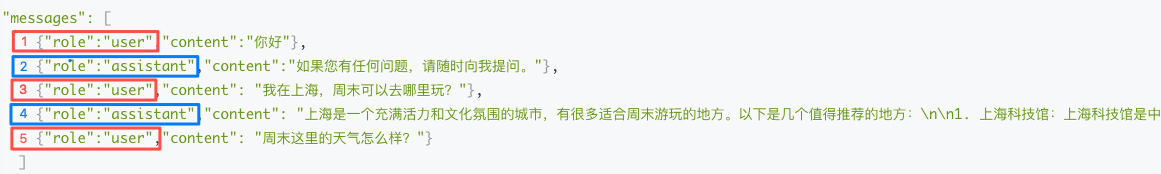

| messages | List | Yes | Chat context information. Description: (1) messages members cannot be empty. 1 member means a single-turn dialogue, multiple members mean a multi-turn dialogue, for example: 1 member example, "messages": [ {"role": "user","content": "Hello"}] 3 members example, "messages": [ {"role": "user","content": "Hello"},{"role":"assistant","content":"What help do you need"},{"role":"user","content":"Introduce yourself"}] (2) The last message is the current request information, the previous messages are historical dialogue information (3) Description of roles in messages: ① The role of the first message must be user or system ② The role of the last message must be user or tool. If it is ERNIE 4.5 or ERNIE-X1-32K-Preview, the role of the last message must be user ③ If function call is not used: · When the role of the first message is user, the role values need to be in the order user -> assistant -> user..., i.e., the role value of odd-numbered messages must be user or function, and the role value of even-numbered messages must be assistant, for example: in the example message, the role values are user, assistant, user, assistant, user; the role value of odd-numbered (red box) messages is user, i.e., the role value of the 1st, 3rd, 5th messages is user; the value of even-numbered (blue box) is assistant, i.e., the role value of the 2nd, 4th messages is assistant  · When the role of the first message is system, the role values need to be in the order system -> user/function -> assistant -> user/function ... (4) The total length of content in messages cannot exceed the input character limit and input token limit of the corresponding model, please check Context Length Description for Each Model (5) If it is ERNIE 4.5, please refer to the following: consecutive user/assistant and starting message as assistant are not supported. 
The specific rules are as follows: · messages members cannot be empty, 1 member means single-turn dialogue, multiple members mean multi-turn dialogue; · The role of the first message must be user or system · The role of the last message must be user · After removing the first system role, the roles need to be in the order user -> assistant -> user ... |

Yes |

| stream | bool | No | Whether to return data in the form of a streaming interface, Description: (1) Can only be false for beam search models (2) Default is false |

Yes |

| temperature | float | No | Description: (1) Higher values make the output more random, while lower values make it more focused and deterministic (2) Default 0.95, range (0, 1.0], cannot be 0 (3) Not supported by the following models: · deepSeek-v3 · deepSeek-r1 · ernie-x1-32k-preview |

Yes |

| top_p | float | No | Description: (1) Affects the diversity of the output text. The larger the value, the stronger the diversity of the generated text (2) Default 0.7, value range [0, 1.0] (3) Not supported by the following models: · deepSeek-v3 · deepSeek-r1 · ernie-x1-32k-preview |

Yes |

| penalty_score | float | No | Reduces the phenomenon of repeated generation by adding penalties to already generated tokens. Description: (1) The larger the value, the greater the penalty (2) Default 1.0, value range: [1.0, 2.0] (3) Not supported by the following models: · deepSeek-v3 · deepSeek-r1 · ernie-x1-32k-preview |

No |

| max_completion_tokens | int | No | Specify the maximum number of output tokens for the model, Description: (1) Value range [2, 2048], please check the supported model list for specific model support |

Yes |

| response_format | string | No | Specify the format of the response content, Description: (1) Optional values: · json_object: return in json format, may not meet expectations · text: return in text format (2) If the parameter response_format is not filled, the default is text (3) Not supported by the following models: ernie-x1-32k-preview |

Yes |

| seed | int | No | Description: (1) Value range: (0, 2147483647), will be randomly generated by the model, default is empty (2) If specified, the system will make a best effort for deterministic sampling, so that repeated requests with the same seed and parameters return the same result (3) Not supported by the following models: ernie-x1-32k-preview |

Yes |

| stop | List | No | Generation stop identifier. When the model's generated result ends with an element in stop, text generation stops. Description: (1) Each element's length should not exceed 20 characters (2) At most 4 elements (3) Not supported by the following models: ernie-x1-32k-preview |

Yes |

| frequency_penalty | float | No | Description: (1) Positive values penalize new tokens based on their existing frequency in the text so far, reducing the likelihood of the model repeating the same line verbatim (2) Value range: [-2.0, 2.0] (3) Supported by the following models: ernie-speed-8k、ernie-speed-128k 、ernie-tiny-8k、ernie-char-8k、ernie-lite-8k |

Yes |

| presence_penalty | float | No | Description: (1) Positive values penalize new tokens based on whether they appear in the text so far, increasing the likelihood of the model talking about new topics (2) Value range: [-2.0, 2.0] (3) Supported by the following models: ernie-speed-8k、ernie-speed-128k 、ernie-tiny-8k、ernie-char-8k、ernie-lite-8k |

Yes |

| tools | List(Tool) | No | A list of descriptions of functions that can be triggered. For supported models, please refer to the supported model list in this document - whether function call is supported | Yes |

| tool_choice | string / tool_choice | No | Description: (1) For supported models, please refer to the supported model list in this document - whether function call is supported (2) string type, optional values are as follows: · none: The model is not expected to call any function, only generate user-facing text messages · auto: The model will automatically decide whether to call functions and which functions to call based on the input content · required: The model is expected to always call one or more functions (3) When it is of type tool_choice, it means prompting the large model to select a specified function in a function call scenario. The specified function name must exist in tools |

Yes |

| parallel_tool_calls | bool | No | Description: (1) For supported models, please refer to the supported model list in this document - whether function call is supported (2) Optional values: · true: means enable parallel function calling, enabled by default · false: means disable parallel function calling |

Yes |

| web_search | web_search | No | Search enhancement options, Description: (1) Default is off (not passed) (2) For supported models, please see the model list description above |

No |
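The ERNIE 4.5 ordering rules in item (5) of the messages row can be checked locally before a request is sent. A minimal sketch, assuming string content in every message (multimodal content lists are not handled) and modelling only the user/assistant/system roles, not the tool or function roles other models accept; `check_ernie45_messages` is a hypothetical helper name.

```python
from typing import Dict, List


def check_ernie45_messages(messages: List[Dict[str, str]]) -> None:
    """Raise ValueError if messages break the ERNIE 4.5 ordering rules.

    Rules encoded: non-empty list, first role user or system, last role user,
    non-blank last content, and strict user/assistant alternation once a
    leading system message is removed.
    """
    if not messages:
        raise ValueError("messages cannot be empty")
    if messages[0]["role"] not in ("user", "system"):
        raise ValueError("first message must be user or system")
    if messages[-1]["role"] != "user":
        raise ValueError("last message must be user")
    # The last message's content cannot consist only of whitespace
    if not messages[-1].get("content", "").strip():
        raise ValueError("last message content cannot be blank")
    body = messages[1:] if messages[0]["role"] == "system" else messages
    for i, msg in enumerate(body):
        expected = "user" if i % 2 == 0 else "assistant"
        if msg["role"] != expected:
            raise ValueError(f"position {i} (after system) should be {expected}, got {msg['role']}")
```

Calling it just before chat.completions.create turns an ordering mistake into a local exception with a readable message instead of a service-side error.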

message Description

| Name | Type | Required | Description |
|---|---|---|---|
| role | string | Yes | Currently supports: · user: the user · assistant: the dialogue assistant · system: the persona |
| name | string | No | Message name |
| content | string | Yes | Dialogue content. (1) Cannot be empty (2) The content of the last message cannot consist only of whitespace characters such as spaces, "\n", "\r", "\f" |

Tool's function Description

The function description in Tool is as follows

| Name | Type | Required | Description |
|---|---|---|---|
| name | string | Yes | Function name |
| description | string | No | Function description |
| parameters | object | No | Function request parameters in JSON Schema format; see the JSON Schema Description |

tool_choice Description

| Name | Type | Required | Description |
|---|---|---|---|
| type | string | Yes | Tool type; fixed value: function |
| function | function | Yes | The function to use |

tool_choice's function Description

| Name | Type | Required | Description |
|---|---|---|---|
| name | string | Yes | Name of the function to use |
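A minimal sketch of building a tools list and a tool_choice object from the fields described above. It assumes each Tool entry uses the OpenAI-style layout {"type": "function", "function": {...}}, matching the fixed type value function used by tool_choice and ToolCall. The helper names and the get_weather function are hypothetical; `force_tool` checks the documented rule that the named function must exist in tools.

```python
from typing import Any, Dict, List, Optional


def make_tool(name: str, description: str = "",
              parameters: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build one entry of the tools list (OpenAI-style layout assumed)."""
    function: Dict[str, Any] = {"name": name}
    if description:
        function["description"] = description
    if parameters is not None:
        function["parameters"] = parameters  # a JSON Schema object
    return {"type": "function", "function": function}


def force_tool(tools: List[Dict[str, Any]], name: str) -> Dict[str, Any]:
    """Build a tool_choice object asking the model to call `name`."""
    names = {t["function"]["name"] for t in tools}
    if name not in names:
        raise ValueError(f"{name!r} is not defined in tools")
    return {"type": "function", "function": {"name": name}}


weather = make_tool(
    "get_weather",  # hypothetical function name
    "Look up today's weather for a city",
    {"type": "object",
     "properties": {"city": {"type": "string"}},
     "required": ["city"]},
)
tools = [weather]
tool_choice = force_tool(tools, "get_weather")
# client.chat.completions.create(..., tools=tools, tool_choice=tool_choice)
```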

web_search Description

| Name | Type | Description |
|---|---|---|
| enable | bool | Whether to enable real-time search. (1) If real-time search is disabled, superscripts and traceability information are not returned (2) Optional values: · true: enable · false: disable (default) |
| enable_citation | bool | Whether to return superscripts. (1) Takes effect only when enable is true (2) Optional values: · true: enable; when search enhancement is triggered, the response includes superscripts and the search traceability information they point to · false: disable (default) (3) If the retrieved content includes non-public webpages, superscripts are not applied |
| enable_trace | bool | Whether to return search traceability information. (1) Takes effect only when enable is true (2) Optional values: · true: return; when search enhancement is triggered, search_results is returned · false: do not return (default) (3) If the retrieved content is a non-public webpage, traceability information is not returned even if search is triggered |

4.1.3 Response Parameter Description

| Name | Type | Description |
|---|---|---|
| id | string | Unique identifier of this request; useful for troubleshooting |
| object | string | Packet type; chat.completion: multi-turn dialogue response |
| created | int | Timestamp |
| model | string | Model ID |
| choices | List[choices] | The content returned differs depending on the stream request parameter |
| usage | usage | Token statistics. (1) Returned by default for non-streaming requests (2) For streaming requests, usage is returned in the last chunk and is null in the others |

choices Description

| Name | Type | Description |
|---|---|---|
| index | int | Position in the choices list |
| message | message | Response message; returned when stream=false |
| delta | delta | Response message; returned when stream=true |
| finish_reason | string | Why output ended: · normal: the output was generated completely by the model, without truncation or replacement · stop: the output was truncated after hitting one of the strings in the stop request parameter · length: the maximum number of tokens was reached · content_filter: the output was truncated, replaced with fallback content, or masked with ** · function_call: the function call feature was invoked |
| flag | int | Security category. (1) When stream=false: · 0 or not returned: safe · 1: low-risk unsafe scenario; the conversation can continue · 2: chat prohibited; the conversation may not continue, but the content can be displayed · 3: display prohibited; the conversation may not continue and the content must not be displayed · 4: screen retraction (2) When stream=true, the presence of flag indicates that a security check was triggered |
| ban_round | int | When flag is not 0, the round of dialogue that contains sensitive information; if it is the current question, ban_round = -1 |
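The flag and finish_reason values above map naturally onto a few yes/no decisions in client code. A minimal sketch covering the non-streaming meanings of flag (with stream=true, any flag simply signals that a security check fired, which this sketch does not handle). The helper names are hypothetical; flag is read with getattr because it is not part of the standard OpenAI schema, and a missing flag is treated as 0, matching "0 or not returned: safe".

```python
from types import SimpleNamespace
from typing import Any


def _flag(choice: Any) -> int:
    # flag is service-specific; "0 or not returned" both mean safe
    return getattr(choice, "flag", 0) or 0


def can_continue(choice: Any) -> bool:
    """Flags 0 (safe) and 1 (low risk) allow the conversation to continue."""
    return _flag(choice) in (0, 1)


def can_display(choice: Any) -> bool:
    """Flag 3 forbids display and flag 4 retracts content already shown."""
    return _flag(choice) not in (3, 4)


def was_cut_short(choice: Any) -> bool:
    """True when output ended on the token limit or the content filter."""
    return choice.finish_reason in ("length", "content_filter")


# Stand-in for completion.choices[0] of a non-streaming response
choice = SimpleNamespace(finish_reason="normal", flag=2)
print(can_continue(choice), can_display(choice), was_cut_short(choice))  # False True False
```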

choices's message Description

| Name | Type | Description |
|---|---|---|
| role | string | Currently supports: · user: the user · assistant: the dialogue assistant · system: the persona |
| name | string | Message name |
| content | string | Dialogue content |
| tool_calls | List[ToolCall] | Function calls; returned in the first round of a function call scenario and passed back in messages as history in the second round |
| tool_call_id | string | (1) Required when role=tool (2) The function call ID generated by the model, matching tool_calls[].id (3) Pass the real ID generated by the model; otherwise results may degrade |
| reasoning_content | string | Chain-of-thought content. Note: only valid when the model is DeepSeek-R1 |

delta Description

| Name | Type | Description |
|---|---|---|
| content | string | Streaming response content |
| tool_calls | List[ToolCall] | Function calls generated by the model, including the function name and call arguments |

ToolCall Description

| Name | Type | Description |
|---|---|---|
| id | string | Unique identifier of the function call, generated by the model |
| type | string | Fixed value: function |
| function | function | Details of the function call |

ToolCall's function Description

| Name | Type | Description |
|---|---|---|
| name | string | Function name |
| arguments | string | Function arguments |
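Because function.arguments arrives as a JSON string and each reply must carry the model-generated tool_call_id, the second round of a function call is mostly bookkeeping. A minimal sketch that executes the requested calls against a local registry and builds role="tool" messages; `run_tool_calls` and `get_weather` are hypothetical, and SimpleNamespace objects stand in for message.tool_calls.

```python
import json
from types import SimpleNamespace
from typing import Any, Callable, Dict, List


def run_tool_calls(tool_calls: List[Any],
                   registry: Dict[str, Callable[..., Any]]) -> List[Dict[str, str]]:
    """Execute model-requested function calls and build role="tool" messages."""
    replies = []
    for call in tool_calls:
        func = registry[call.function.name]
        # arguments is a JSON string; decode it into keyword arguments
        kwargs = json.loads(call.function.arguments or "{}")
        result = func(**kwargs)
        replies.append({
            "role": "tool",
            "tool_call_id": call.id,  # echo the model-generated ID unchanged
            "content": json.dumps(result, ensure_ascii=False),
        })
    return replies


# Hypothetical local implementation of a tool the model may call
def get_weather(city: str) -> dict:
    return {"city": city, "weather": "sunny"}


calls = [SimpleNamespace(
    id="call_1", type="function",
    function=SimpleNamespace(name="get_weather", arguments='{"city": "Shanghai"}'),
)]
print(run_tool_calls(calls, {"get_weather": get_weather}))
```

For the second round, append the assistant message that contained tool_calls, then these tool messages, and call chat.completions.create again.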

search_results Description

| Name | Type | Description |
|---|---|---|
| index | int | Sequence number |
| url | string | Search result URL |
| title | string | Search result title |

usage Description

| Name | Type | Description |
|---|---|---|
| prompt_tokens | int | Number of prompt tokens (including the dialogue history) |
| completion_tokens | int | Number of answer tokens |
| total_tokens | int | Total number of tokens |
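For streaming requests, the response description says usage is filled in only on the last chunk and is null on the others. A minimal sketch that keeps the last non-null usage; `final_usage` is a hypothetical helper and SimpleNamespace objects stand in for real chunks.

```python
from types import SimpleNamespace
from typing import Any, Iterable, Optional


def final_usage(chunks: Iterable[Any]) -> Optional[Any]:
    """Return the usage object from a stream of chunks (None if never sent)."""
    usage = None
    for chunk in chunks:
        if getattr(chunk, "usage", None) is not None:
            usage = chunk.usage
    return usage


stream = [
    SimpleNamespace(usage=None),
    SimpleNamespace(usage=None),
    SimpleNamespace(usage=SimpleNamespace(prompt_tokens=12, completion_tokens=30, total_tokens=42)),
]
print(final_usage(stream).total_tokens)  # 42
```

A real stream can only be iterated once, so in practice the same check belongs inside the loop that prints delta.content rather than in a separate pass.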

4.2 Text-to-Image

4.2.1 Model Use

```python
import base64
import os

from openai import OpenAI

client = OpenAI(
    api_key=os.environ.get("AI_STUDIO_API_KEY"),  # Environment variable containing the AI Studio access token, see https://aistudio.baidu.com/account/accessToken
    base_url="https://aistudio.baidu.com/llm/lmapi/v3",  # AI Studio LLM API service domain
)

# Generated image returned as a URL
images_url = client.images.generate(prompt="A white cat, red hat", model="Stable-Diffusion-XL", response_format="url")
print(images_url.data[0].url)

# Generated image returned as base64
images_base64 = client.images.generate(prompt="A black cat, blue hat", model="Stable-Diffusion-XL", response_format="b64_json")

# Decode and save the generated images
for i, image in enumerate(images_base64.data):
    with open("image_{}.png".format(i), "wb") as f:
        f.write(base64.b64decode(image.b64_json))
```

4.2.2 Request Parameter Description

| Name | Type | Required | Description | Natively supported by openai-python |
|---|---|---|---|---|
| model | string | Yes | Model ID; available values can be obtained from client.models.list() | Yes |
| prompt | string | Yes | Prompt, i.e. the elements the image should contain. Length limit 1024 characters; keep the total within about 150 Chinese characters or English words | Yes |
| negative_prompt | string | No | Negative prompt, i.e. the elements the image should not contain. Length limit 1024 characters; keep the total within about 150 Chinese characters or English words | No |
| response_format | string | No | Format of the returned image; must be url or b64_json. A returned url is valid for 7 days after generation | Yes |
| size | string | No | Image width x height. (1) Default 1024x1024 (2) Allowed values: · avatars: "768x768", "1024x1024", "1536x1536", "2048x2048" · article illustrations: "1024x768", "2048x1536" · posters/flyers: "768x1024", "1536x2048", "576x1024", "1152x2048" · desktop wallpapers: "1024x576", "2048x1152" | Yes |
| n | int | No | Number of images to generate. (1) Default 1 (2) Range 1 to 4 (3) Generating many images at once or sending frequent requests may cause timeouts | Yes |
| steps | int | No | Number of iterations; default 20, range [10, 50] | No |
| style | string | No | Generation style. (1) Default Base (2) Optional values: Base (basic style), 3D Model, Analog Film, Anime, Cinematic, Comic Book, Craft Clay, Digital Art, Enhance, Fantasy Art, Isometric, Line Art, Lowpoly, Neonpunk, Origami, Photographic, Pixel Art, Texture | Yes |
| sampler_index | string | No | Sampling method. (1) Default Euler a (2) Optional values: Euler, Euler a, DPM++ 2M, DPM++ 2M Karras, LMS Karras, DPM++ SDE, DPM++ SDE Karras, DPM2 a Karras, Heun, DPM++ 2M SDE, DPM++ 2M SDE Karras, DPM2, DPM2 Karras, DPM2 a, LMS | No |
| retry_count | int | No | Number of retries; default 1 | No |
| request_timeout | float | No | Request timeout; default 60 seconds | No |
| backoff_factor | float | No | Backoff factor for the retry strategy; default 0 | No |
| seed | integer | No | Random seed. If not set, a random number is generated automatically. Range [0, 4294967295] | No |
| cfg_scale | float | No | Prompt relevance; default 5, range 0 to 30 | No |
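Parameters marked No in the last column are not arguments of openai-python's images.generate, so they must reach the service another way. A minimal sketch, assuming the service accepts them as extra JSON fields sent through openai-python's extra_body option (the mechanism the search-enhancement example in section 5.4 uses for web_search); whether client-side options such as retry_count or request_timeout are honored this way is not confirmed by this document. The helper name and the size/n checks are illustrative.

```python
from typing import Any, Dict, Tuple

# Parameters the table marks as natively supported by openai-python
NATIVE_IMAGE_PARAMS = {"model", "prompt", "response_format", "size", "n", "style"}

# Sizes listed in the size row of the table
ALLOWED_SIZES = {
    "768x768", "1024x1024", "1536x1536", "2048x2048",  # avatars
    "1024x768", "2048x1536",                           # article illustrations
    "768x1024", "1536x2048", "576x1024", "1152x2048",  # posters/flyers
    "1024x576", "2048x1152",                           # desktop wallpapers
}


def split_image_params(params: Dict[str, Any]) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Split options into native keyword arguments and an extra_body dict."""
    if params.get("size", "1024x1024") not in ALLOWED_SIZES:
        raise ValueError(f"unsupported size {params['size']!r}")
    if not 1 <= params.get("n", 1) <= 4:
        raise ValueError("n must be between 1 and 4")
    native = {k: v for k, v in params.items() if k in NATIVE_IMAGE_PARAMS}
    extra = {k: v for k, v in params.items() if k not in NATIVE_IMAGE_PARAMS}
    return native, extra


native, extra = split_image_params({
    "model": "Stable-Diffusion-XL", "prompt": "A white cat, red hat",
    "size": "1024x768", "steps": 30, "cfg_scale": 7,
})
# client.images.generate(**native, extra_body=extra)
```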

4.2.3 Model Response Description

| Name | Type | Description |
|---|---|---|
| created | int | Timestamp |
| data | list(image) | Generated image results |

image Description

| Name | Type | Description |
|---|---|---|
| b64_json | string | Base64-encoded image content; returned only when response_format=b64_json |
| url | string | Image URL; returned only when response_format=url |
| index | int | Sequence number |

4.3 Embeddings

4.3.1 Model Use

```python
import os

from openai import OpenAI

client = OpenAI(
    api_key=os.environ.get("AI_STUDIO_API_KEY"),  # Environment variable containing the AI Studio access token, see https://aistudio.baidu.com/account/accessToken
    base_url="https://aistudio.baidu.com/llm/lmapi/v3",  # AI Studio LLM API service domain
)

embeddings = client.embeddings.create(
    model="embedding-v1",
    input=[
        "Recommend some food",
        "Tell me a story",
    ],
)

print(embeddings)
```

4.3.2 Request Parameter Description

| Name | Type | Required | Description |
|---|---|---|---|
| model | str | No | Model ID; available values can be obtained from client.models.list() |
| input | List[str] | Yes | Input texts. (1) Cannot be an empty list, and no member can be an empty string (2) At most 16 texts (3) Per-model limits: · embedding-v1: at most 16 texts; each text at most 384 tokens and 1000 characters · bge-large-zh: at most 16 texts; each text at most 512 tokens and 2000 characters |
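Since one request accepts at most 16 non-empty texts, a larger corpus has to be split across several calls. A minimal sketch of that batching; `batch_texts` is a hypothetical helper, and it does not check the per-text token or character limits, which still apply to each text.

```python
from typing import Iterator, List


def batch_texts(texts: List[str], batch_size: int = 16) -> Iterator[List[str]]:
    """Yield consecutive slices of at most batch_size texts.

    Empty strings are rejected up front because the input description
    forbids them.
    """
    if any(not t for t in texts):
        raise ValueError("input texts cannot be empty strings")
    for start in range(0, len(texts), batch_size):
        yield texts[start:start + batch_size]


# Usage sketch (not executed here):
#   vectors = []
#   for batch in batch_texts(corpus):
#       resp = client.embeddings.create(model="bge-large-zh", input=batch)
#       vectors.extend(item.embedding for item in sorted(resp.data, key=lambda d: d.index))
```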

4.3.3 Return Parameter Description

| Name | Type | Description |
|---|---|---|
| object | str | Packet type; fixed value "embedding_list" |
| data | List[EmbeddingData] | Embedding results; one member per input text |
| usage | Usage | Token statistics; token count = number of Chinese characters + number of words × 1.3 (an estimate only) |
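The token estimate in the usage row can be written out as code, for example to check a text against the per-text limits before sending it. A minimal sketch; the document does not define what counts as a word or a Chinese character, so treating runs of ASCII letters and digits as words and the CJK Unified Ideographs block (U+4E00 to U+9FFF) as Chinese characters are assumptions. Since the document calls this an estimate, treat it as a budgeting aid rather than the billed count.

```python
import re

# Assumption: a "word" is a run of ASCII letters/digits
_WORD = re.compile(r"[A-Za-z0-9]+")
# Assumption: "Chinese characters" are the CJK Unified Ideographs block
_HAN = re.compile(r"[\u4e00-\u9fff]")


def estimate_tokens(text: str) -> float:
    """Rough token estimate: Chinese characters + 1.3 * words."""
    return len(_HAN.findall(text)) + 1.3 * len(_WORD.findall(text))


print(estimate_tokens("你好"))  # 2.0
```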

EmbeddingData Description

| Name | Type | Description |
|---|---|---|
| object | str | Fixed value "embedding" |
| embedding | List[float] | Embedding vector |
| index | int | Sequence number |

Usage Description

| Name | Type | Description |
|---|---|---|
| prompt_tokens | int | Number of prompt tokens |
| total_tokens | int | Total number of tokens |

5. Model Extension Capability Usage

5.1 Multi-Turn Dialogue

```python
import os

from openai import OpenAI

client = OpenAI(
    api_key=os.environ.get("AI_STUDIO_API_KEY"),  # Environment variable containing the AI Studio access token, see https://aistudio.baidu.com/account/accessToken
    base_url="https://aistudio.baidu.com/llm/lmapi/v3",  # AI Studio LLM API service domain
)


def get_response(messages):
    # The full history is sent on every turn; the API keeps no state between calls
    return client.chat.completions.create(model="ernie-3.5-8k", messages=messages)


messages = [
    {
        "role": "system",
        "content": "You are an AI Studio developer assistant. You are proficient in development-related knowledge and responsible for providing developers with search-related help and suggestions.",
    }
]

assistant_output = "Hello, I am the AI Studio developer assistant. How can I help you?"
print('Enter "End" to end the conversation\n')
print(f"Model output: {assistant_output}\n")

while True:
    user_input = input("Please enter: ")
    # Exit before the "End" command is sent to the model
    if "End" in user_input:
        break
    # Add the user's question to the messages list
    messages.append({"role": "user", "content": user_input})
    assistant_output = get_response(messages).choices[0].message.content
    # Add the model's reply to the messages list so the next turn has context
    messages.append({"role": "assistant", "content": assistant_output})
    print(f"Model output: {assistant_output}\n")
```

5.2 Streaming Output
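With stream=True, the SDK yields chunks, and each chunk's choices[0].delta.content carries the next piece of text (see the delta description in section 4.1.3). A minimal sketch that joins those pieces into the complete reply, skipping pieces that are None and chunks whose choices list is empty; `collect_stream` is a hypothetical helper and SimpleNamespace objects stand in for real chunks.

```python
from types import SimpleNamespace
from typing import Any, Iterable


def collect_stream(chunks: Iterable[Any]) -> str:
    """Concatenate delta.content from streamed chunks into one string."""
    parts = []
    for chunk in chunks:
        if not chunk.choices:  # a chunk may carry no choices (e.g. usage only)
            continue
        piece = chunk.choices[0].delta.content
        if piece:  # content may be None
            parts.append(piece)
    return "".join(parts)


def fake_chunk(text):
    # Mimics the shape chunk.choices[0].delta.content
    return SimpleNamespace(choices=[SimpleNamespace(delta=SimpleNamespace(content=text))])


demo = [fake_chunk("Hello, "), fake_chunk(None), fake_chunk("AI Studio"), SimpleNamespace(choices=[])]
print(collect_stream(demo))  # Hello, AI Studio
```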

```python
import os

from openai import OpenAI

client = OpenAI(
    api_key=os.environ.get("AI_STUDIO_API_KEY"),  # Environment variable containing the AI Studio access token, see https://aistudio.baidu.com/account/accessToken
    base_url="https://aistudio.baidu.com/llm/lmapi/v3",  # AI Studio LLM API service domain
)

completion = client.chat.completions.create(
    model="ernie-3.5-8k",
    messages=[
        {'role': 'system', 'content': 'You are a developer assistant for the AI Studio training platform. You are proficient in development-related knowledge and responsible for providing developers with search-related help and suggestions.'},
        {'role': 'user', 'content': 'Hello, please introduce AI Studio'},
    ],
    stream=True,
)

for chunk in completion:
    # Skip chunks without choices; delta.content may be None
    if chunk.choices:
        print(chunk.choices[0].delta.content or "", end="")
```

5.3 Asynchronous Use

```python
import asyncio
import os

from openai import AsyncOpenAI

client = AsyncOpenAI(
    api_key=os.environ.get("AI_STUDIO_API_KEY"),  # Environment variable containing the AI Studio access token, see https://aistudio.baidu.com/account/accessToken
    base_url="https://aistudio.baidu.com/llm/lmapi/v3",  # AI Studio LLM API service domain
)


async def main() -> None:
    chat_completion = await client.chat.completions.create(
        messages=[
            {'role': 'system', 'content': 'You are a developer assistant for the AI Studio training platform. You are proficient in development-related knowledge and responsible for providing developers with search-related help and suggestions.'},
            {'role': 'user', 'content': 'Hello, please introduce AI Studio'},
        ],
        model="ernie-3.5-8k",
    )
    print(chat_completion.choices[0].message.content)


asyncio.run(main())
```

5.4 Search Enhancement

Usage Scenarios

Use search enhancement in scenarios that require real-time information or the latest data, such as news queries, literature retrieval, and tracking policy changes. With web search, the model can retrieve current data and answer such questions more accurately.

How to Use

Add the following web_search parameters to the request body to enable web search. The parameter descriptions are as follows:

| Parameter Name | Type | Required | Default Value | Description |
|---|---|---|---|---|
| enable | boolean | No | false | Whether to enable web search |
| enable_trace | boolean | No | false | Whether to return traceability information |
| enable_status | boolean | No | false | Whether to return a search trigger signal in the response. If search is triggered, the first packet returns 'Searching', and delta_tag:search_status marks it as a signal packet |
| enable_citation | boolean | No | false | Whether to include citation superscripts in the response. Single superscript format: ^[1]^; multiple superscripts: ^[1][2]^ |
| search_number | integer | No | 10 | Number of documents to retrieve, range [1, 28] |
| reference_number | integer | No | 10 | Number of documents the model uses for its summary, range [1, 28]; must be ≤ search_num |
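A minimal sketch of building the web_search object while enforcing the documented constraints: both document counts lie in [1, 28], and the reference count must not exceed the search count. The table names these fields search_number and reference_number, while the parameter example uses search_num and reference_num; this sketch assumes the example's shorter names, which should be verified against the service. `web_search_options` is a hypothetical helper name.

```python
from typing import Dict, Union


def web_search_options(search_num: int = 10, reference_num: int = 10,
                       citation: bool = False, trace: bool = False,
                       status: bool = False) -> Dict[str, Union[bool, int]]:
    """Build a web_search object, validating the documented ranges."""
    for name, value in (("search_num", search_num), ("reference_num", reference_num)):
        if not 1 <= value <= 28:
            raise ValueError(f"{name} must be in [1, 28], got {value}")
    if reference_num > search_num:
        raise ValueError("reference_num must be <= search_num")
    return {
        "enable": True,
        "enable_citation": citation,
        "enable_trace": trace,
        "enable_status": status,
        "search_num": search_num,  # key name assumed from the parameter example
        "reference_num": reference_num,
    }


# openai-python has no web_search argument, so send it through extra_body:
#   client.chat.completions.create(..., extra_body={"web_search": web_search_options(10, 5, trace=True)})
```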

Parameter Example:

```json
{
  "web_search": {
    "enable": true,
    "enable_citation": true,
    "enable_trace": true,
    "enable_status": true,
    "search_num": 10,
    "reference_num": 5
  }
}
```

Supported Models:

- ernie-4.5

- ernie-4.5-turbo

- ernie-4.0

- ernie-4.0-turbo

- ernie-3.5

- deepseek-r1

- deepseek-v3

Code Example:

```python
import os

from openai import OpenAI

client = OpenAI(
    api_key=os.environ.get("AI_STUDIO_API_KEY"),  # Environment variable containing the AI Studio access token, see https://aistudio.baidu.com/account/accessToken
    base_url="https://aistudio.baidu.com/llm/lmapi/v3",  # AI Studio LLM API service domain
)

completion = client.chat.completions.create(
    model="ernie-4.0-turbo-8k",
    messages=[
        {
            "role": "user",
            "content": [
                {
                    "type": "text",
                    "text": "Who is the men's singles table tennis champion of the 2024 Olympics",
                }
            ],
        }
    ],
    # web_search is not an openai-python argument, so it is sent via extra_body
    extra_body={
        "web_search": {
            "enable": True,
            "enable_trace": True,
        }
    },
    stream=True,
)

search_result = []
for chunk in completion:
    if len(chunk.choices) > 0:
        # search_results is a service-specific field; it may be absent or null
        if getattr(chunk, "search_results", None):
            search_result.extend(chunk.search_results)
        print(chunk.choices[0].delta.content or "", end="", flush=True)

# The same reference can arrive in several chunks; keep one entry per index
unique_dict = {}
for item in search_result:
    unique_dict[item["index"]] = item

print("\nReferences:\n")
for result in unique_dict.values():
    print(str(result["index"]) + ". " + result["title"] + ". " + result["url"] + "\n")
```

5.5 Structured Output

Introduction

JSON is one of the most widely used formats for exchanging data between applications.

Structured output is a feature that ensures the model always generates a response that conforms to the JSON schema you provide, so users don't have to worry about the model omitting required keys or producing invalid enum values.

Some benefits of structured output include:

- Reliable type safety: No need to validate or retry improperly formatted responses

- Explicit refusals: refusals the model makes for safety reasons can be detected programmatically

- Simpler prompting: No need to use strongly-worded prompts to achieve consistent formatting

How to Enable

Use the response_format field to control the format of the generated response.

| Field | Data Type | Description |
|---|---|---|
| type | string | Format of the response content. Allowed values: text (default): plain text; json_object: JSON output, though its structure may not match expectations; json_schema: output in the format defined by json_schema |
| json_schema | object | Schema definition in JSON Schema format (see the JSON Schema description). Required when type is json_schema |
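A minimal sketch of building a json_schema request body and reading the reply. The inner shape of the json_schema object ({"name": ..., "schema": ...}) is an assumption borrowed from the OpenAI API, not confirmed by this page; check it against the JSON Schema description. The helper names and the weather schema are hypothetical. parse_json_reply uses json.JSONDecoder.raw_decode rather than json.loads so it also tolerates json_object replies that append prose after the JSON value.

```python
import json
from typing import Any, Dict


def json_schema_format(name: str, schema: Dict[str, Any]) -> Dict[str, Any]:
    """Build a response_format value with type json_schema.

    Assumption: the json_schema object follows the OpenAI-compatible shape
    {"name": ..., "schema": ...}.
    """
    return {"type": "json_schema", "json_schema": {"name": name, "schema": schema}}


def parse_json_reply(text: str) -> Any:
    """Parse the leading JSON value of a reply and ignore any trailing text."""
    # raw_decode does not skip leading whitespace, so strip it first.
    value, _end = json.JSONDecoder().raw_decode(text.lstrip())
    return value


# Hypothetical schema for the weather question used in the request example.
weather_schema = {
    "type": "object",
    "properties": {
        "city": {"type": "string"},
        "summary": {"type": "string"},
    },
    "required": ["city", "summary"],
}

request_body = {
    "model": "ernie-3.5-8k",
    "messages": [{"role": "user", "content": "Shanghai weather today"}],
    "response_format": json_schema_format("weather", weather_schema),
}
```

With openai-python, the same dictionary would go in the response_format argument of client.chat.completions.create, and parse_json_reply would be applied to choices[0].message.content.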

Supported Models

- ernie-4.5

- ernie-4.0-turbo

- ernie-3.5

Code Example

{
    "model": "ernie-3.5-8k",
    "messages": [
        {
            "role": "user",
            "content": "Shanghai weather today"
        }
    ],
    "response_format": {
        "type": "text"
    }
}

The type value "text" can be replaced with "json_object" or "json_schema" (json_schema also requires the json_schema field). The format of the returned content changes with the response_format setting:

- response_format not enabled:

Since weather information is updated in real-time, I cannot directly provide the precise weather conditions for Shanghai today.\n\nTo get the latest Shanghai weather information, I recommend you check a weather forecast application, visit the official website of the meteorological bureau, or use other reliable weather information sources. These platforms usually provide detailed real-time weather data such as temperature, humidity, wind speed, precipitation probability, etc., as well as weather forecasts for the next few days.\n\nHope these suggestions are helpful to you!

- response_format enabled:

"{\n \"Shanghai today's weather\": \"Since I cannot obtain real-time weather information, I am unable to provide the exact weather conditions for Shanghai today.\"\n}\n\nTo get real-time weather for Shanghai today, I recommend you check the weather app on your phone, visit the official website of the meteorological bureau, or use other reliable weather information sources. These channels usually provide the latest weather conditions, temperature, humidity, wind speed, and other detailed information."

5.6 Function Calling

Capability Introduction

Function calling connects large models to external tools or code. It can improve model results in scenarios that need real-time data or computation, and it lets the model trigger external operations in tool-calling scenarios such as information retrieval, database operations, and graph search and processing.

tools is an optional parameter in the model service API used to provide function definitions to the model. With this parameter, the model can generate function parameters that conform to the specifications provided by the user. Please note that the model service API does not actually execute any function calls. It only returns whether to call a function, the name of the function to be called, and the parameters required to call the function. Developers can use the parameters output by the model to further execute the function call in their system.

Supported Models

- ernie-x1-turbo-32k

- deepseek-r1

- deepseek-v3

Calling Steps

- Define the function using JSON Schema format;

- Submit the defined function(s) to a model that supports function calling via the tools parameter; multiple functions can be submitted at once;

- The model will decide which function to use, or not to use any function, based on the current chat context;

- If the model decides to use a function, it will return the parameters and information required to call the function in JSON format;

- Use the parameters output by the model to execute the corresponding function, and submit the execution result of this function to the model;

- The model will give the user a reply based on the function's execution result.
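Since the API never executes functions itself, step 5 runs on your side. The sketch below shows one way to do it, assuming the OpenAI-compatible tool-call format (each call has an id plus function.name and function.arguments, where arguments is a JSON string) and that results are returned to the model as role "tool" messages. get_current_weather and TOOL_REGISTRY are hypothetical stand-ins for your own code.

```python
import json
from typing import Any, Callable, Dict, List


def get_current_weather(location: str, unit: str = "celsius") -> Dict[str, Any]:
    """Hypothetical local implementation; replace with a real weather lookup."""
    return {"location": location, "unit": unit, "temperature": 22}


# Map the function names declared in tools to local Python callables.
TOOL_REGISTRY: Dict[str, Callable[..., Any]] = {"get_current_weather": get_current_weather}


def run_tool_calls(tool_calls: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Execute each tool call returned by the model and build role="tool" messages."""
    results = []
    for call in tool_calls:
        func = TOOL_REGISTRY[call["function"]["name"]]
        # The model returns the arguments as a JSON-encoded string.
        args = json.loads(call["function"]["arguments"] or "{}")
        output = func(**args)
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],  # links the result to the model's request
            "content": json.dumps(output),
        })
    return results
```

With openai-python, the input could come from [tc.model_dump() for tc in completion.choices[0].message.tool_calls]; you would then append the assistant message and the returned tool messages to messages and call client.chat.completions.create again with the same tools so the model can answer (step 6).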

Example Code

import os

from openai import OpenAI

client = OpenAI(
    api_key=os.environ.get("AI_STUDIO_API_KEY"),  # Environment variable holding your AI Studio access token (see https://aistudio.baidu.com/account/accessToken)
    base_url="https://aistudio.baidu.com/llm/lmapi/v3",  # AI Studio LLM API service domain
)

tools = [
    {
        "type": "function",
        "function": {
            "name": "get_current_weather",
            "description": "Get the current weather in a given location",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {
                        "type": "string",
                        "description": "The city and state, e.g. San Francisco, CA",
                    },
                    "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
                },
                "required": ["location"],
            },
        }
    }
]

messages = [{"role": "user", "content": "What's the weather like in Boston today?"}]

completion = client.chat.completions.create(
    model="deepseek-v3",
    messages=messages,
    tools=tools,
    tool_choice="auto"  # let the model decide whether to call a function
)

# If the model decides to call a function, choices[0].message.tool_calls holds
# the function name and its JSON-encoded arguments
print(completion)

5.7 Print Chain of Thought (Thinking Model)

Non-streaming

import os

from openai import OpenAI

client = OpenAI(
    api_key=os.environ.get("AI_STUDIO_API_KEY"),  # Environment variable holding your AI Studio access token (see https://aistudio.baidu.com/account/accessToken)
    base_url="https://aistudio.baidu.com/llm/lmapi/v3",  # AI Studio LLM API service domain
)

chat_completion = client.chat.completions.create(
    messages=[
        {'role': 'system', 'content': 'You are a developer assistant for the AI Studio training platform. You are proficient in development-related knowledge and responsible for providing developers with search-related help and suggestions.'},
        {'role': 'user', 'content': 'Hello, please introduce AI Studio'}
    ],
    model="deepseek-r1",
)

# reasoning_content holds the chain of thought; content holds the final answer
print(chat_completion.choices[0].message.reasoning_content)
print(chat_completion.choices[0].message.content)

Streaming

import os

from openai import OpenAI

client = OpenAI(
    api_key=os.environ.get("AI_STUDIO_API_KEY"),  # Environment variable holding your AI Studio access token (see https://aistudio.baidu.com/account/accessToken)
    base_url="https://aistudio.baidu.com/llm/lmapi/v3",  # AI Studio LLM API service domain
)

completion = client.chat.completions.create(
    model="deepseek-r1",
    messages=[
        {'role': 'system', 'content': 'You are a developer assistant for the AI Studio training platform. You are proficient in development-related knowledge and responsible for providing developers with search-related help and suggestions.'},
        {'role': 'user', 'content': 'Hello, please introduce AI Studio'}
    ],
    stream=True,
)

for chunk in completion:
    if chunk.choices:
        delta = chunk.choices[0].delta
        # reasoning_content carries the chain of thought; content carries the answer
        reasoning = getattr(delta, "reasoning_content", None)
        if reasoning:
            print(reasoning, end="", flush=True)
        else:
            print(delta.content or "", end="", flush=True)

5.8 Multimodality

5.8.1 Multimodal - Text Input

import os

from openai import OpenAI

client = OpenAI(
    api_key=os.environ.get("AI_STUDIO_API_KEY"),  # Environment variable holding your AI Studio access token (see https://aistudio.baidu.com/account/accessToken)
    base_url="https://aistudio.baidu.com/llm/lmapi/v3",  # AI Studio LLM API service domain
)

completion = client.chat.completions.create(
    model="ernie-4.5-8k-preview",
    messages=[
        {
            'role': 'user', 'content': [
                {
                    "type": "text",
                    "text": "Introduce a few famous attractions in Beijing"
                }
            ]
        }
    ]
)

print(completion.choices[0].message.content or "")

5.8.2 Multimodal - Text Input - Streaming

import os

from openai import OpenAI

client = OpenAI(
    api_key=os.environ.get("AI_STUDIO_API_KEY"),  # Environment variable holding your AI Studio access token (see https://aistudio.baidu.com/account/accessToken)
    base_url="https://aistudio.baidu.com/llm/lmapi/v3",  # AI Studio LLM API service domain
)

completion = client.chat.completions.create(
    model="ernie-4.5-8k-preview",
    messages=[
        {
            'role': 'user', 'content': [
                {
                    "type": "text",
                    "text": "Introduce a few famous attractions in Beijing"
                }
            ]
        }
    ],
    stream=True,  # required for iterating over chunks below
)

for chunk in completion:
    if chunk.choices:
        print(chunk.choices[0].delta.content or "", end="", flush=True)

5.8.3 Multimodal - Image Input (url) - Streaming

import os

from openai import OpenAI

client = OpenAI(
    api_key=os.environ.get("AI_STUDIO_API_KEY"),  # Environment variable holding your AI Studio access token (see https://aistudio.baidu.com/account/accessToken)
    base_url="https://aistudio.baidu.com/llm/lmapi/v3",  # AI Studio LLM API service domain
)

completion = client.chat.completions.create(
    model="ernie-4.5-8k-preview",
    messages=[
        {
            'role': 'user', 'content': [
                {
                    "type": "image_url",
                    "image_url": {
                        "url": "https://bucket-demo-bj.bj.bcebos.com/pic/wuyuetian.png",
                        "detail": "high"
                    }
                }
            ]
        }
    ],
    stream=True,
)

for chunk in completion:
    if chunk.choices:
        print(chunk.choices[0].delta.content or "", end="", flush=True)

5.8.4 Multimodal - Image Input (base64) - Streaming

import base64
import os

from openai import OpenAI

def encode_image(image_path):
    # Read the image file and return its contents as a Base64 string
    with open(image_path, "rb") as image_file:
        return base64.b64encode(image_file.read()).decode("utf-8")

# Path to your image
image_path = "/image_1.png"

# Getting the Base64 string
base64_image = encode_image(image_path)

client = OpenAI(
    api_key=os.environ.get("AI_STUDIO_API_KEY"),  # Environment variable holding your AI Studio access token (see https://aistudio.baidu.com/account/accessToken)
    base_url="https://aistudio.baidu.com/llm/lmapi/v3",  # AI Studio LLM API service domain
)

completion = client.chat.completions.create(
    model="ernie-4.5-8k-preview",
    messages=[
        {
            'role': 'user', 'content': [
                {
                    "type": "image_url",
                    "image_url": {
                        # The MIME type in the data URL should match the image format (PNG here)
                        "url": f"data:image/png;base64,{base64_image}"
                    }
                }
            ]
        }
    ],
    stream=True,
)

for chunk in completion:
    if chunk.choices:
        print(chunk.choices[0].delta.content or "", end="", flush=True)

5.8.5 Multimodal - Image + Text Input - Streaming

import os

from openai import OpenAI

client = OpenAI(
    api_key=os.environ.get("AI_STUDIO_API_KEY"),  # Environment variable holding your AI Studio access token (see https://aistudio.baidu.com/account/accessToken)
    base_url="https://aistudio.baidu.com/llm/lmapi/v3",  # AI Studio LLM API service domain
)

completion = client.chat.completions.create(
    model="ernie-4.5-8k-preview",
    messages=[
        {
            "role": "user",
            "content": [
                {
                    "type": "text",
                    "text": "Which band is in the picture"
                },
                {
                    "type": "image_url",
                    "image_url": {
                        "url": "https://bucket-demo-bj.bj.bcebos.com/pic/wuyuetian.png",
                        "detail": "high"
                    }
                }
            ]
        }
    ],
    stream=True,
)

for chunk in completion:
    if chunk.choices:
        print(chunk.choices[0].delta.content or "", end="", flush=True)

5.8.6 Multimodal - Video Understanding - Streaming

import os

from openai import OpenAI

client = OpenAI(
    api_key=os.environ.get("AI_STUDIO_API_KEY"),  # Environment variable holding your AI Studio access token (see https://aistudio.baidu.com/account/accessToken)
    base_url="https://aistudio.baidu.com/llm/lmapi/v3",  # AI Studio LLM API service domain
)

completion = client.chat.completions.create(
    model="default",
    temperature=0.6,
    messages=[
        {
            "role": "user",
            "content": [
                {
                    "type": "text",
                    "text": "Describe this video"
                },
                {
                    "type": "video_url",
                    "video_url": {
                        "url": "https://bucket-demo-01.gz.bcebos.com/video/sea.mov",
                        "fps": 1
                    }
                }
            ]
        }
    ],
    stream=True
)

for chunk in completion:
    if chunk.choices:
        print(chunk.choices[0].delta.content or "", end="", flush=True)

Notes:

- The model is stateless: each call is independent, so you must manage the information passed to the model yourself. If the model needs to refer to the same image across several requests, include the image in every request.

- Both single and multiple images are supported. Each image must not exceed 10MB, and the total tokens of all image inputs must not exceed the model's context length. For example, for the ERNIE-4.5 model, image input must not exceed 8K tokens.

- Image formats:

  a. Base64 image: JPG, JPEG, PNG, and BMP are supported. The value must be passed in the form data:image/<format>;base64,<Base64 data>.

  b. Public image URL: JPG, JPEG, PNG, BMP, and WEBP are supported.
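The notes above imply two client-side chores: building a Base64 data URL whose MIME type matches the file, and re-sending the image-bearing turn on every call because the model keeps no state. The sketch below handles both; all helper names are hypothetical. It assumes the 10MB limit applies to the raw image bytes (the notes do not say whether it is measured before or after Base64 encoding) and it does not check the token budget.

```python
import base64
from pathlib import Path
from typing import Any, Dict, List

# MIME subtypes for the formats accepted as Base64 (JPG, JPEG, PNG, BMP).
BASE64_MIME = {".jpg": "jpeg", ".jpeg": "jpeg", ".png": "png", ".bmp": "bmp"}
MAX_IMAGE_BYTES = 10 * 1024 * 1024  # 10MB per image (assumed to mean raw bytes)


def image_bytes_to_data_url(data: bytes, suffix: str) -> str:
    """Encode raw image bytes as data:image/<format>;base64,<data>."""
    subtype = BASE64_MIME.get(suffix.lower())
    if subtype is None:
        raise ValueError(f"unsupported Base64 image type: {suffix}")
    if len(data) > MAX_IMAGE_BYTES:
        raise ValueError("image exceeds 10MB")
    return f"data:image/{subtype};base64,{base64.b64encode(data).decode('ascii')}"


def image_file_to_data_url(path: str) -> str:
    """Read an image file and encode it, using its extension to pick the MIME type."""
    p = Path(path)
    return image_bytes_to_data_url(p.read_bytes(), p.suffix)


def user_turn(text: str, image_url: str) -> Dict[str, Any]:
    """Build a user message that carries the image alongside the question."""
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": text},
            {"type": "image_url", "image_url": {"url": image_url}},
        ],
    }


def next_request(history: List[Dict[str, Any]], reply: str, question: str) -> List[Dict[str, Any]]:
    """Messages for the next call: all prior turns (including the image), the reply, a new question."""
    return history + [
        {"role": "assistant", "content": reply},
        {"role": "user", "content": [{"type": "text", "text": question}]},
    ]
```

Because next_request returns a new list that still contains the original image turn, each follow-up call re-sends the image, as the stateless note requires.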

6. API Error Codes

| HTTP Status Code | Type | Error Code | Error Message |
|---|---|---|---|
| 400 | invalid_request_error | malformed_json | Invalid JSON |
| 400 | invalid_request_error | invalid_model | model is empty |
| 400 | invalid_request_error | malformed_json | Invalid Argument |
| 400 | invalid_request_error | malformed_json | (specific error message returned) |
| 400 | invalid_request_error | invalid_messages | (specific error message returned) |
| 400 | invalid_request_error | characters_too_long | the max input characters is xxx |
| 400 | invalid_request_error | invalid_user_id | user_id can not be empty |
| 400 | invalid_request_error | tokens_too_long | Prompt tokens too long |
| 401 | access_denied | no_parameter_permission | (specific error message returned) |
| 401 | invalid_request_error | invalid_model | No permission to use the model |
| 401 | invalid_request_error | invalid_appid | No permission to use the appid |
| 401 | invalid_request_error | invalid_iam_token | IAM Certification failed |
| 403 | unsafe_request | system_unsafe | the content of system field is invalid |
| 403 | unsafe_request | user_setting_unsafe | the content of user field is invalid |
| 403 | unsafe_request | functions_unsafe | the content of functions field is invalid |
| 404 | invalid_request_error | no_such_model | |
| 405 | invalid_request_error | method_not_supported | Only POST requests are accepted |
| 429 | rate_limit_exceeded | rpm_rate_limit_exceeded | Rate limit reached for RPM |
| 429 | rate_limit_exceeded | tpm_rate_limit_exceeded | Rate limit reached for TPM |
| 429 | rate_limit_exceeded | preemptible_rate_limit_exceeded | Rate limit reached for preemptible resource |
| 429 | rate_limit_exceeded | user_rate_limit_exceeded | qps request limit by APP ID reached |
| 429 | rate_limit_exceeded | cluster_rate_limit_exceeded | request limit by resouce cluster reached |
| 500 | Internal_error | internal_error | Internal error |
| 500 | Internal_error | dispatch_internal_error | Internal error |
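In the table above, 429 (rate limits) and 500 (internal errors) usually describe transient conditions, while the other 4xx codes point to a problem with the request itself that a retry will not fix. The sketch below retries only those two statuses with exponential backoff and full jitter. The classification and the limits (5 attempts, 30-second cap) are assumptions, not documented service policy. It reads the status from an exception's status_code attribute, which openai.APIStatusError provides.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")

# Assumed transient statuses from the error table: rate limits and internal errors.
RETRYABLE_STATUS = {429, 500}


def should_retry(status_code: int, attempt: int, max_attempts: int = 5) -> bool:
    """Return True if the attempt-th failure with this status should be retried."""
    return status_code in RETRYABLE_STATUS and attempt < max_attempts


def backoff_delay(attempt: int, base: float = 1.0, cap: float = 30.0,
                  rng: Optional[random.Random] = None) -> float:
    """Exponential backoff with full jitter: uniform in [0, min(cap, base * 2**attempt)]."""
    rng = rng or random.Random()
    return rng.uniform(0, min(cap, base * 2 ** attempt))


def call_with_retry(fn: Callable[[], T], max_attempts: int = 5,
                    sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying when it raises an error whose status_code is retryable."""
    attempt = 0
    while True:
        try:
            return fn()
        except Exception as err:
            # openai.APIStatusError exposes the HTTP status as err.status_code
            status = getattr(err, "status_code", None)
            attempt += 1
            if status is None or not should_retry(status, attempt, max_attempts):
                raise
            sleep(backoff_delay(attempt))
```

Usage would look like call_with_retry(lambda: client.chat.completions.create(model="ernie-3.5-8k", messages=messages)). Note that the openai client already retries some of these errors itself (controlled by OpenAI(max_retries=...)); a custom loop like this is mainly useful when you set max_retries=0 and want explicit control.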